Developing and testing autonomous vehicle technologies often involves working across a wide range of platform sizes — from miniature testbeds to full-scale vehicles — each chosen based on space, safety, and budget considerations. However, this diversity introduces significant challenges when it comes to deploying and validating autonomy algorithms. Differences in vehicle dynamics, sensor configurations, computing resources, and environmental conditions, along with regulatory and scalability concerns, make the process complex and fragmented. To address these issues, we introduce the AutoDRIVE Ecosystem — a unified framework designed to model and simulate digital twins of autonomous vehicles across different scales and operational design domains (ODDs). In this blog, we explore how the AutoDRIVE Ecosystem leverages autonomy-oriented digital twins to deploy the Autoware software stack on various vehicle platforms to achieve ODD-specific tasks. We also highlight its flexibility in supporting virtual, hybrid, and real-world testing paradigms — enabling a seamless simulation-to-reality (sim2real) transition of autonomous driving software.

The Vision

As autonomous vehicle systems grow in complexity, simulation has become essential for bridging the gap between conceptual design and real-world deployment. Yet, creating simulations that accurately reflect realistic vehicle dynamics, sensor characteristics, and environmental conditions — while also enabling real-time interactivity — remains a major challenge. Traditional simulations often fall short in supporting these “autonomy-oriented” demands, where back-end physics and front-end graphics must be balanced with equal fidelity.

To truly enable simulation-driven design, testing, and validation of autonomous systems, we envision a shift from static, fixed-parameter virtual models to dynamic and adaptive digital twins. These autonomy-oriented digital twins capture the full system-of-systems-level interactions — including vehicles, sensors, actuators, infrastructure and environment — while offering seamless integration with autonomy software stacks.

This blog presents our approach to building such digital twins across different vehicle scales, using a unified real2sim2real workflow to support robust development and deployment of the Autoware stack. Our goal is to close the loop between simulation and reality, enabling smarter, faster, and more scalable autonomy development.

Digital Twins

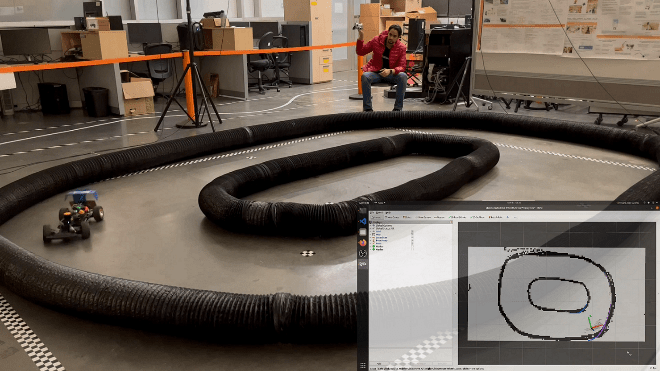

To demonstrate our framework across different operational scales, we worked with a diverse fleet of autonomous vehicles — from small-scale experimental platforms to full-sized commercial vehicles. These included Nigel (1:14 scale), RoboRacer (1:10 scale), Hunter SE (1:5 scale), and OpenCAV (1:1 scale).

Each platform was equipped with sensors tailored to its size and function. Smaller vehicles like Nigel and RoboRacer featured hobby-grade sensors such as encoders, IMUs, RGB/D cameras, 2D LiDARs, and indoor positioning systems (IPS). Larger platforms, such as Hunter SE and OpenCAV, were retrofitted with different variants of 3D LiDARs and other industry-grade sensors. Actuation setups also varied by scale. While the smaller platforms relied on basic throttle and steering actuators, OpenCAV included a full powertrain model with detailed control over throttle, brakes, steering, and handbrakes — mirroring real-world vehicle commands.
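
The fleet described above can be summarized as a small data structure. The following is a minimal Python sketch, not part of the AutoDRIVE codebase. The `Platform` class and its field names are hypothetical, and the sensor tuples condense the sensor families named in the text rather than listing each vehicle's exact sensor suite. The text does not state the actuator set of Hunter SE, so it is left unspecified here.

```python
from dataclasses import dataclass
from fractions import Fraction
from typing import Optional, Tuple


@dataclass(frozen=True)
class Platform:
    """Hypothetical summary record for one vehicle platform."""
    name: str
    scale: Fraction                        # size relative to a full-scale vehicle
    sensors: Tuple[str, ...]               # sensor families named in the text
    actuators: Optional[Tuple[str, ...]]   # None where the text does not say


# Sensor families condensed from the description above (not exact per-vehicle lists).
HOBBY_GRADE = ("encoder", "imu", "camera", "lidar_2d", "ips")
INDUSTRY_GRADE = ("lidar_3d",)

FLEET = (
    Platform("Nigel", Fraction(1, 14), HOBBY_GRADE, ("throttle", "steering")),
    Platform("RoboRacer", Fraction(1, 10), HOBBY_GRADE, ("throttle", "steering")),
    Platform("Hunter SE", Fraction(1, 5), INDUSTRY_GRADE, None),
    Platform("OpenCAV", Fraction(1, 1), INDUSTRY_GRADE,
             ("throttle", "brake", "steering", "handbrake")),
)


def platforms_with(sensor: str):
    """Return the names of platforms whose sensor families include `sensor`."""
    return [p.name for p in FLEET if sensor in p.sensors]


def by_scale():
    """Return platform names ordered from smallest to largest scale."""
    return [p.name for p in sorted(FLEET, key=lambda p: p.scale)]
```

A structure like this makes it easy to ask, for instance, which platforms carry a 3D LiDAR (`platforms_with("lidar_3d")`) before choosing a perception pipeline for them.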

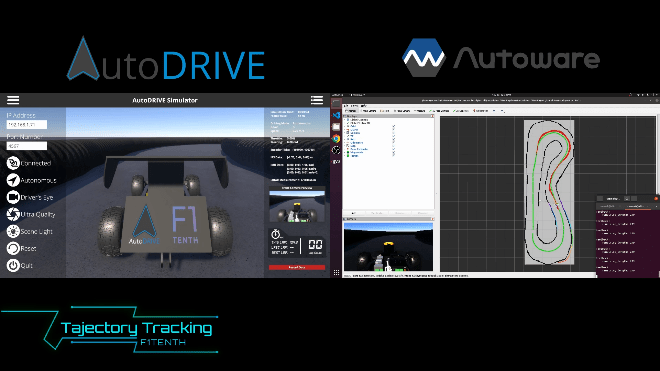

For digital twinning, we adopted the AutoDRIVE Simulator, a high-fidelity platform built for autonomy-centric applications. Each digital twin was calibrated to match its physical counterpart in terms of its perception characteristics as well as system dynamics, ensuring a reliable real2sim transfer.

Autoware API

The core API development and integration with the Autoware stack for all the virtual and real vehicles was accomplished using the AutoDRIVE Devkit. Specifically, AutoDRIVE’s Autoware API builds on top of its ROS 2 API, which is streamlined to work with the Autoware Core/Universe stack. It is fully compatible with both the AutoDRIVE Simulator and the AutoDRIVE Testbed, ensuring a seamless sim2real transfer without changing any perception, planning, or control algorithms or parameters.
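
The idea of running the same algorithm against a simulator and a physical testbed can be illustrated with a small Python sketch. It is only an illustration under stated assumptions: `VehicleBackend`, `RecordingBackend`, and `speed_controller_step` are hypothetical names that do not come from the AutoDRIVE Devkit or Autoware, and the actual integration communicates over ROS 2 rather than through direct method calls.

```python
from typing import List, Protocol


class VehicleBackend(Protocol):
    """Hypothetical interface that a simulator and a testbed could both satisfy."""

    def read_speed(self) -> float: ...
    def send_throttle(self, throttle: float) -> None: ...


class RecordingBackend:
    """Stand-in backend that reports a fixed speed and records received commands."""

    def __init__(self, label: str, speed: float) -> None:
        self.label = label
        self.speed = speed
        self.commands: List[float] = []

    def read_speed(self) -> float:
        return self.speed

    def send_throttle(self, throttle: float) -> None:
        self.commands.append(throttle)


def speed_controller_step(backend: VehicleBackend, target_speed: float,
                          gain: float = 0.5) -> float:
    """One proportional-control step; it does not know which backend it drives."""
    error = target_speed - backend.read_speed()
    throttle = max(-1.0, min(1.0, gain * error))  # clamp to a normalized range
    backend.send_throttle(throttle)
    return throttle


# The same controller code and gain run unchanged against either backend.
sim = RecordingBackend("simulator", speed=1.0)
real = RecordingBackend("testbed", speed=1.0)
for backend in (sim, real):
    speed_controller_step(backend, target_speed=2.0)
```

Because the controller depends only on the interface, switching from the virtual to the real vehicle is a matter of passing in a different backend object, with the algorithm and its parameters left untouched.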

The exact inputs, outputs, and configurations of the perception, planning, and control modules vary with the underlying vehicle platform. Therefore, to keep the overall project clean and well-organized, we developed several custom meta-packages within the Autoware stack. These handle the different perception, planning, and control algorithms, each with its own input and output information, as independent packages. A separate meta-package handles the different vehicles, namely Nigel, RoboRacer, Hunter SE, and OpenCAV. Each vehicle-specific package hosts parameter configurations for the perception, planning, and control algorithms, along with environment maps, RViz configurations, API scripts, teleoperation programs, and convenient launch files for getting started quickly.
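
The per-vehicle organization described above can be pictured as a lookup from a vehicle and a module to a parameter file. The following Python sketch is purely illustrative: the package names, directory layout, and file names are hypothetical and are not taken from the actual repository.

```python
from pathlib import PurePosixPath

VEHICLES = ("nigel", "roboracer", "hunter_se", "opencav")
MODULES = ("perception", "planning", "control")


def param_file(vehicle: str, module: str) -> PurePosixPath:
    """Build the (hypothetical) path to a vehicle-specific parameter file."""
    if vehicle not in VEHICLES:
        raise ValueError(f"unknown vehicle: {vehicle}")
    if module not in MODULES:
        raise ValueError(f"unknown module: {module}")
    # Hypothetical layout: one package per vehicle, one parameter file per module.
    return PurePosixPath(f"autodrive_{vehicle}") / "config" / f"{module}.param.yaml"


def all_param_files(vehicle: str):
    """List the parameter files a launch file for `vehicle` would need to load."""
    return [str(param_file(vehicle, m)) for m in MODULES]
```

Keeping every vehicle-specific value behind a lookup of this kind is one way to let the shared algorithm packages stay free of per-vehicle branches.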

Applications and Use Cases

The following is a brief summary of potential applications and use cases, which align well with the different ODDs proposed by the Autoware Foundation:

- Autonomous Valet Parking (AVP): Mapping of a parking lot, localization within the created map and autonomous driving within the parking lot.

- Cargo Delivery: Autonomous mobile robots for the transport of goods between multiple points or last-mile delivery.

- Racing: Autonomous racing using small-scale (e.g. RoboRacer) and full-scale (e.g. Indy Autonomous Challenge) vehicles running the Autoware stack.

- Robo-Bus/Shuttle: Fully autonomous (Level 4) buses and shuttles operating on public roads with predefined routes and stops.

- Robo-Taxi: Fully autonomous (Level 4) taxis operating in dense urban environments to transport passengers from point A to point B.

- Off-Road Exploration: The Autoware Foundation has recently introduced an off-road ODD. Such off-road deployments could serve agricultural, military, or extra-terrestrial applications.

Getting Started

You can get started with AutoDRIVE and Autoware today! Here are a few useful resources to take that first step towards immersing yourself within the Autoware Universe:

- GitHub Repository: This repository is a fork of the upstream Autoware Universe repository and contains the AutoDRIVE-Autoware integration APIs and demos.

- Documentation: This documentation provides detailed steps for installation as well as setting up the turn-key demos.

- YouTube Playlist: This playlist contains videos ranging from the installation tutorial to various turn-key demos.

- Research Paper: This paper provides a scientific perspective on why and how the AutoDRIVE-Autoware integration is useful.

What’s Next?

PIXKIT 2.0 Digital Twin in AutoDRIVE

We are working on digitally twinning a growing number of Autoware-supported platforms (e.g., PIXKIT) using the AutoDRIVE Ecosystem, thereby expanding its serviceability. We hope that this will lower the barrier to entry for students and researchers who are getting started with the Autoware stack itself or with the different Autoware-enabled autonomous vehicles.